Evaluate

The evaluation process was an incredibly productive period in which we gained valuable insights from an outside perspective. When you are this close to your own project, it is easy to overlook its faults or unclear areas, because you already know the goals and unconsciously fill in the gaps. Talking with people who are not working on our project was invaluable for revealing weaknesses and strengths we might otherwise overlook. In this blog, I will outline how we prepared for the evaluation and then describe the evaluation itself.

Our group decided to dedicate most of our evaluation time to the prototype itself. However, we recognized that our feedback group would need some contextual background to approach the prototype from the right perspective. While it was very likely that members of our feedback group were Disney+ consumers themselves, our persona uses Disney+ both personally and as a parent. To address this, we created a presentation to guide the experience and then let our users interact with the prototype directly.

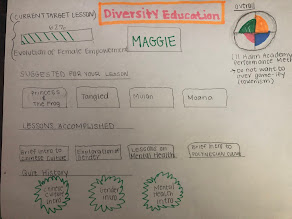

The presentation briefly described our persona, Maggie, so that our feedback group could empathize with our goal of meeting her needs. For context, Maggie is a millennial mother of two, a self-proclaimed nerd who enjoys Marvel and Lucasfilm, and someone who values inclusivity in her communities. Introducing Maggie encouraged our feedback group to look beyond how they personally might interact with the product and to imagine how Maggie, as both a personal and a parental user, might interact with the concept. We then briefly described how to use the prototype.

When planning the prototype evaluation, one question we raised was how much direction to give our feedback group. We wanted them to understand enough to operate the prototype meaningfully, but not so much that we primed them for an idealized experience. This tension between underinforming and overinforming sparked an interesting discussion in our group. We wanted honest feedback: if there were points of confusion, we did not want participants to have enough information to fill the gaps themselves. We wanted them to articulate that confusion.

From there, we let our feedback group use the prototype directly, offering casual suggestions throughout the time we allocated for the hands-on experience.

We then moved to the evaluation discussion phase, in which our feedback group answered the overarching questions we posed on a shared Jamboard. During preparation, we had drafted questions that we wanted answered about our prototype. When we reviewed them with Professor Luchs, he suggested that they were too specific and that we should simplify them. In retrospect, those questions, while necessary, were too specific to ask at the outset. We switched to a feedback grid that asked broader questions about our concept, such as points of confusion or lingering questions. These questions made conversation easier, and some of the shared insights eventually led us to the questions we had originally hoped to answer.

The feedback itself gave us meaningful insight into the questions we hoped to answer. For one, we were concerned about our working concept title, "Diversity Education Mode." We did not want to suggest that this mode captures everything one could hope to learn about diversity; it was meant as a mechanism to promote and inspire inclusivity across communities. Our fear was that the concept might encourage disingenuous engagement with diversity, manifesting as performative gestures or tokenism. Our feedback group confirmed our suspicions about the title, and we concluded that we should rename the mode with words that prioritize education and inclusivity rather than diversity.

Another question we brought to our feedback group was whether to gamify these lessons. We wanted to incentivize learning about diversity, but we did not want to create something like "diversity points." We worried that gamification could diminish the importance of the subject and quickly become inappropriate. Responses from our feedback group were mixed. Some felt this could be a slippery slope that trivializes serious issues. Others felt that incentivizing learning through points is productive for children and consistent with the Disney brand. One suggestion was to include games across the entire platform rather than only within the context of inclusivity.

We also asked whether the feature disrupted the viewing flow. Our feedback group felt that the pop-up information throughout the content was an enhancement rather than a hindrance. Several of their other suggestions described features we had already designed into our concept, which suggests those features were not immediately apparent. This will guide our next round of refinement, where we can present these features more clearly.

Overall, this was a very productive feedback session that gave us a lot to consider as we refine our concept. Most notably, we need to rename the mode, since "Diversity Education Mode" does not seem appropriate. Additionally, the suggestions for features that were already part of the prototype showed us that we need to present those features more clearly. With this feedback, we are in a good position to refine our product to meet our users' suggestions and preferences.
